In part 2 of this three-part series (part 1 here), I introduce the non-RT RIC (non-real-time RAN Intelligent Controller). Before jumping into its capabilities, let's look at the limitations of current RAN infrastructures and at future needs.

Rapid traffic growth and the multiple frequency bands used in a commercial network make it challenging to steer traffic so that load is balanced across cells and bands.

Current controls are limited to:

- Adjusting the cell re-selection and handover parameters

- Modifying load calculations and cell priorities

- RRM (Radio Resource Management) features in the existing cellular network that are all cell-centric

- Base stations based on traditional control strategies treat all UEs in a similar way and are usually focused on average cell-centric performance, rather than being UE-centric

The current semi-static QoS framework does not efficiently satisfy the diversified quality of experience (QoE) requirements of applications such as cloud VR or video. The framework is limited to looking at previous movement patterns to reduce the number of handovers.

The non-RT RIC, which is part of the O-RAN Service Management & Orchestration (SMO) layer, addresses these limitations and supports various use cases. It allows operators to flexibly configure the desired optimization policies, use the right performance criteria, and leverage machine learning to enable intelligent and proactive traffic control.

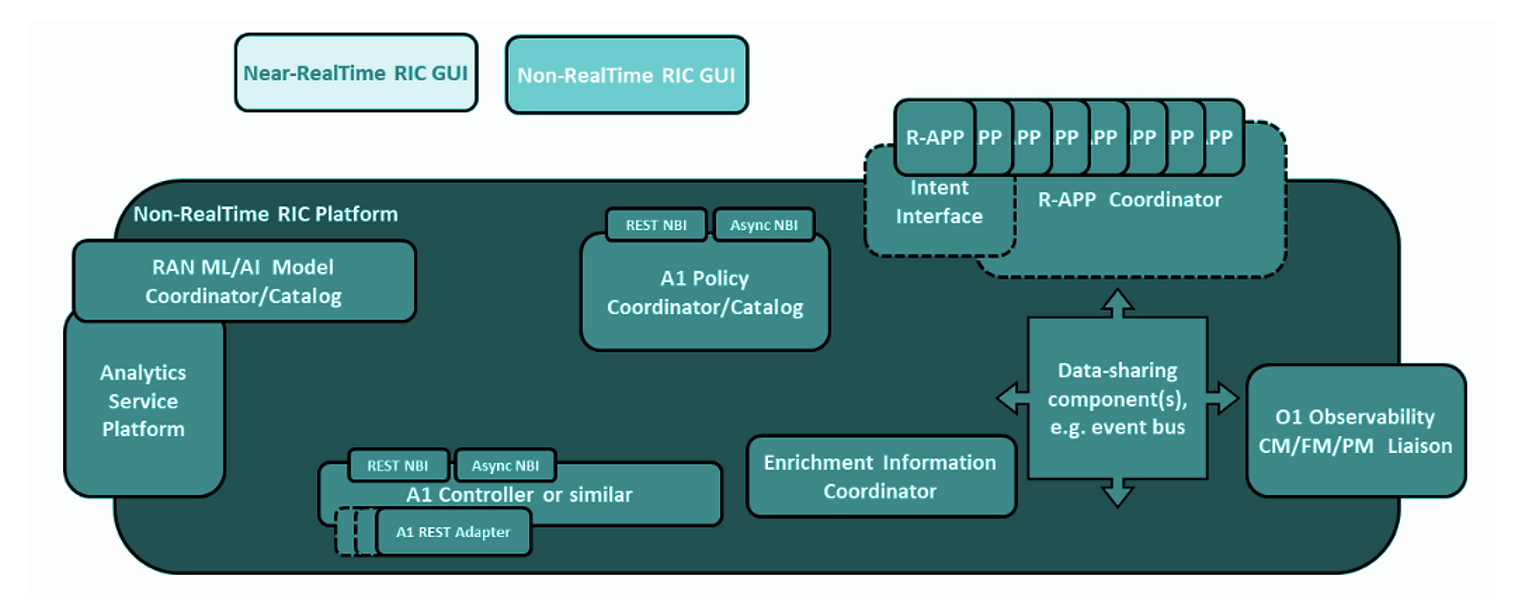

The following figure shows a functional view of the non-RT RIC platform.

As described in the O-RAN architecture, the non-RT RIC communicates with the near-RT RIC via the A1 interface. Over the A1 interface, the non-RT RIC performs actions such as:

- Policy Management: facilitate the provisioning of policies for individual UEs or groups of UEs

- Monitoring: monitor and provide basic feedback on the policy state from near-RT RICs

- Enrichment Information: provide enrichment information as required by near-RT RICs

- AI/ML updates: facilitate ML model training, distribution and inference in cooperation with the near-RT RICs
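As a rough illustration of the policy management function above, the sketch below models a policy that targets either a single UE or a group of UEs and serializes it to JSON. The class names, field names (`ue_id`, `group_id`, `statement`) and the `prefer_cell` statement are hypothetical and chosen for readability; they are not taken from the O-RAN A1 specifications.

```python
import json
from dataclasses import dataclass, field, asdict
from typing import Optional


@dataclass
class PolicyScope:
    # Hypothetical scope: the policy targets one UE or one group of UEs.
    ue_id: Optional[str] = None
    group_id: Optional[str] = None


@dataclass
class A1Policy:
    # Hypothetical policy container, not the real A1 schema.
    policy_id: str
    scope: PolicyScope
    statement: dict = field(default_factory=dict)

    def to_json(self) -> str:
        # asdict() converts nested dataclasses to plain dicts.
        return json.dumps(asdict(self), sort_keys=True)


def make_policy(policy_id, statement, ue_id=None, group_id=None):
    """Build a policy scoped to exactly one of: a UE or a UE group."""
    if (ue_id is None) == (group_id is None):
        raise ValueError("set exactly one of ue_id or group_id")
    return A1Policy(policy_id, PolicyScope(ue_id, group_id), statement)


if __name__ == "__main__":
    policy = make_policy("p-001", {"prefer_cell": "cell-A"}, group_id="vr-users")
    print(policy.to_json())
```

Forcing the scope to name exactly one target keeps the "individual UEs or groups of UEs" distinction explicit; a real deployment would validate the policy against the schema of its A1 policy type instead.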

Data Collection

The non-RT RIC platform within the SMO collects FCAPS (fault, configuration, accounting, performance, and security management) data related to RAN elements over the O1 interface. Examples of collected items:

- Events

- Performance data

- Cell load statistics

- UE-level radio information, connection and mobility/handover statistics

- Various KPI metrics from CU/DU/RU nodes

Derived Analytics Data

The collected data is used to derive analytics data. Derived analytics data includes measurement counters and KPIs that are aggregated by cell, QoS type, slice, etc. Examples of derived analytics data:

- Measurement reports with RSRP/RSRQ/CQI information for serving and neighboring cells

- UE connection and mobility/handover statistics with indication of successful and failed handovers

- Cell load statistics such as the number of active users or connections, the number of scheduled active users, and PRB and CCE utilization

- Per user performance statistics such as PDCP throughput, RLC or MAC layer latency
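To make the aggregation step concrete, here is a minimal sketch that rolls periodic cell reports up into per-cell KPIs: mean PRB utilization, mean active users, and handover success rate. The `CellSample` record and its field names are assumptions made for this example; real O1 performance data has its own format and counter names.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class CellSample:
    # Hypothetical periodic report for one cell.
    cell_id: str
    prb_utilization: float  # fraction of PRBs in use, 0.0 to 1.0
    active_users: int
    ho_attempts: int
    ho_failures: int


def aggregate_by_cell(samples):
    """Group samples by cell and derive simple per-cell KPIs."""
    buckets = defaultdict(list)
    for sample in samples:
        buckets[sample.cell_id].append(sample)

    report = {}
    for cell_id, group in buckets.items():
        attempts = sum(s.ho_attempts for s in group)
        failures = sum(s.ho_failures for s in group)
        report[cell_id] = {
            "mean_prb_utilization": sum(s.prb_utilization for s in group) / len(group),
            "mean_active_users": sum(s.active_users for s in group) / len(group),
            # No attempts in the window means the rate is undefined.
            "ho_success_rate": (attempts - failures) / attempts if attempts else None,
        }
    return report


if __name__ == "__main__":
    samples = [
        CellSample("cell-A", 0.5, 10, 4, 1),
        CellSample("cell-A", 0.7, 20, 6, 1),
        CellSample("cell-B", 0.2, 3, 0, 0),
    ]
    for cell, kpis in aggregate_by_cell(samples).items():
        print(cell, kpis)
```

The same grouping pattern extends to other keys mentioned above, such as QoS type or slice, by changing the bucket key.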

The non-RT RIC can bring data-driven operation to various RAN optimizations. AI-enabled policies and ML-based models generate messages in the non-RT RIC that are conveyed to the near-RT RIC. The core algorithms of the non-RT RIC are owned and deployed by network operators.

These algorithms provide the capability to modify RAN behavior by deploying different models optimized for individual operator policies and optimization objectives.

The following are a few such examples and applicable scenarios:

- Adapt RRM control for diversified scenarios and optimization objectives.

- Predict network and UE performance.

- Manage traffic with improved response times by selecting the right set of UEs for control action. This improves system and UE performance, with increased throughput and fewer handover failures.

- Use the O1/EI data collection for offline training of models, as well as for generating A1 policies for V2X handover optimization such as handover prediction and detection.

- The non-RT RIC can influence how RAN resources are allocated to different users through a QoS target statement in an A1 policy.
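Two of the ideas above, selecting the right set of UEs and expressing a QoS target in a policy, can be sketched together. The example below picks the UEs furthest below a throughput target and builds one policy per selected UE. The function names, the selection rule, and the policy fields (`scope`, `qos_target`, `min_throughput_mbps`) are all illustrative assumptions, not the actual A1 policy format or an operator's real algorithm.

```python
def select_ues(throughput_mbps, target_mbps, max_ues):
    """Return up to max_ues UE ids below the target, worst performers first."""
    below = [(ue, tput) for ue, tput in throughput_mbps.items() if tput < target_mbps]
    below.sort(key=lambda item: item[1])  # lowest throughput first
    return [ue for ue, _ in below[:max_ues]]


def qos_policies(ue_ids, target_mbps):
    """Build one hypothetical QoS-target policy per selected UE."""
    return [
        {
            "scope": {"ue_id": ue},
            "qos_target": {"min_throughput_mbps": target_mbps},
        }
        for ue in ue_ids
    ]


if __name__ == "__main__":
    # Hypothetical per-UE throughput measurements in Mbit/s.
    observed = {"ue1": 5.0, "ue2": 30.0, "ue3": 2.0, "ue4": 8.0}
    chosen = select_ues(observed, target_mbps=10.0, max_ues=2)
    for policy in qos_policies(chosen, target_mbps=10.0):
        print(policy)
```

Capping the selection with `max_ues` reflects the point that control actions are applied to a chosen subset of UEs instead of to every UE in the cell; an ML-based model could replace the simple threshold rule used here.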

In the next post, we will look at the use cases where the non-RT RIC can play a pivotal role in optimizing RAN resources.
